European Journal of Taxonomy

2017 (283) - Pages 1-25 (EJT-283)

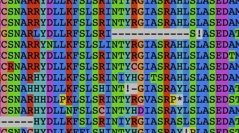

In the mid-2000s, molecular phylogenetics turned into phylogenomics, a development that improved the resolution of phylogenetic trees through a dramatic reduction in stochastic error. While some then predicted “the end of incongruence”, it soon appeared that analysing large amounts of sequence data without an adequate model of sequence evolution amplifies systematic error and leads to phylogenetic artefacts. With the increasing flood of (sometimes low-quality) genomic data resulting from the rise of high-throughput sequencing, a new type of error has emerged. Termed here “data errors”, it lumps together several kinds of issues affecting the construction of phylogenomic supermatrices (e.g., sequencing and annotation errors, contaminant sequences). While easy to deal with at the single-gene scale, such errors become very difficult to avoid at the genomic scale, both because hand-curating thousands of sequences is prohibitively time-consuming and because suitable automated bioinformatics tools are still in their infancy. In this paper, we first review the pitfalls affecting the construction of supermatrices and the strategies to limit their adverse effects on phylogenomic inference. Then, after discussing the relative non-issue of missing data in supermatrices, we briefly present the approaches commonly used to reduce systematic error.

Phylogenomics, supermatrix, systematic error, data quality, incongruence.